Event Details:

Tuesday, February 15, 2022

8:30am - 9:30am PST

This event is open to:

General Public

Free and open to the public

[Link to join] (ID: 996 2837 2037, Password: 386638)

- Speaker: Luke Keele (University of Pennsylvania)

- Discussant: Iván Díaz (Cornell University)

- Title: So Many Choices: The Comparative Performance of Statistical Adjustment Methods

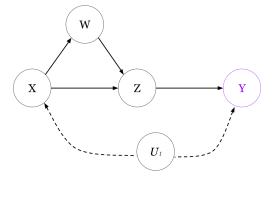

- Abstract: Much evidence in applied research is based on observational studies in which investigators assume that there are no unobservable differences between the groups under comparison. Treatment effects are estimated after adjusting for observed confounders via statistical methods. However, even if the assumption of no unobserved confounding holds, bias from model misspecification may be substantial. Traditionally, regression models of various kinds have been used to adjust for confounders. Such models impose strong functional form assumptions, which makes them especially prone to misspecification. In the causal inference literature, considerable effort has gone into developing more flexible adjustment methods; in fact, the number of methods available for adjusting for observed confounders has exploded. Investigators can now choose among many forms of matching, weighting, doubly robust methods, and a variety of machine learning based estimators. The general trend has been toward flexible methods of estimation, and most recent work has specifically sought to combine machine learning with a doubly robust framework. While these methods have clear theoretical advantages, they see little use in the applied literature, and the development of guidelines for applied researchers has been limited. In this presentation, I review key concepts related to functional form assumptions and how they can contribute to bias from model misspecification. I also review the logic behind why machine learning methods have been so widely proposed for estimation, and I assess the strengths and weaknesses of these methods. I present two case studies in which I seek to recover experimental benchmarks using observational study data, comparing the performance of a wide variety of methods for statistical adjustment. I find that several widely used methods are subject to bias from model misspecification. I also find that while machine learning methods are among the strongest performers, they are not always reliable.
